Connecting large language models (LLMs) to external tools has often required custom APIs and one-off connectors, making it difficult to scale AI-driven workflows. The Model Context Protocol (MCP) changes that by offering an open standard for LLMs to interact consistently with servers, databases, and productivity tools.

Adoption of MCP is accelerating across industries, with over 14,000 MCP servers and 300 client implementations already in use. Frameworks like FastAPI and Node.js are fueling this growth further, with a sharp rise in MCP integrations.

MCP is backed by OpenAI, Google DeepMind, and Microsoft. Its adoption in agents, SDKs, and even Windows AI Foundry signals that the protocol is becoming foundational for practical AI systems.

This blog covers four topics:

- What an LLM MCP server is.
- How MCP servers work.
- How to connect one to an LLM, with code examples.
- Why integration matters for developers and organizations.

Key Takeaways

- MCP servers allow LLMs to connect with external tools and databases through a unified standard.

- They simplify integration, cutting down on repetitive custom APIs and connectors.

- Backed by major players, MCP is shaping the future of scalable, production-ready AI systems.

- By streamlining workflows and enabling real-time interactions, MCP empowers teams to work more efficiently.

What are LLM MCP Servers?

An LLM MCP Server is a backend service built on the Model Context Protocol (MCP). This open standard lets large language models (LLMs) connect with external tools, databases, and APIs in a consistent way. Instead of writing a custom connector for every new system, developers expose functionality through an MCP server. Any LLM application that supports MCP can then use it.

Think of the MCP server as a bridge:

- On one side, it speaks the language of databases, file systems, or productivity tools.

- On the other side, it communicates with the LLM using a shared protocol.

This makes integrations scalable, modular, and reusable, solving the traditional problem of fragmented APIs and one-off connectors.

A typical MCP server:

- Defines a set of capabilities (e.g., querying a database, fetching calendar events, running code).

- Exposes these capabilities through a standardized MCP interface.

- Allows LLMs to call them directly in context, without needing hardcoded integrations.
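The Python sketch below makes these three responsibilities concrete. It is a minimal, framework-free tool registry, not the official MCP SDK. The `ToolRegistry` class, its `tool` decorator, and the `get_calendar_events` tool are all hypothetical. The sketch only mirrors the shape MCP uses when a server advertises a tool: a `name`, a `description`, and a JSON Schema `inputSchema`.

```python
import json
from typing import Any, Callable


class ToolRegistry:
    """Hypothetical registry: holds tool definitions and their handlers."""

    def __init__(self) -> None:
        self._tools: dict[str, dict[str, Any]] = {}
        self._handlers: dict[str, Callable[..., Any]] = {}

    def tool(self, name: str, description: str, input_schema: dict[str, Any]):
        """Decorator that registers a function as a named, described capability."""
        def register(fn: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[name] = {
                "name": name,
                "description": description,
                "inputSchema": input_schema,
            }
            self._handlers[name] = fn
            return fn
        return register

    def list_tools(self) -> list[dict[str, Any]]:
        """What a client would see when it asks which tools exist."""
        return list(self._tools.values())

    def call(self, name: str, arguments: dict[str, Any]) -> Any:
        """Run a registered tool by name with keyword arguments."""
        if name not in self._handlers:
            raise KeyError(f"unknown tool: {name}")
        return self._handlers[name](**arguments)


registry = ToolRegistry()


@registry.tool(
    name="get_calendar_events",
    description="Return calendar events for a given ISO date.",
    input_schema={
        "type": "object",
        "properties": {"date": {"type": "string"}},
        "required": ["date"],
    },
)
def get_calendar_events(date: str) -> list[dict[str, str]]:
    # Stub data standing in for a real calendar API call.
    return [{"date": date, "title": "Team sync"}]


if __name__ == "__main__":
    print(json.dumps(registry.list_tools(), indent=2))
    print(registry.call("get_calendar_events", {"date": "2025-01-15"}))
```

The official MCP Python SDK offers a comparable decorator-based approach through its `FastMCP` class (`@mcp.tool()`). It derives the input schema from the function's type hints.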

For example, instead of coding a custom API to let an LLM fetch user tasks from Notion or Trello, you can run a Notion MCP Server, and the LLM can query it seamlessly.


Architecture of LLM MCP Servers

MCP's architecture has two parts worth breaking down:

- The Client–Host–Server model, which defines who talks to whom.
- The protocol and transport stack, which defines how messages travel between them.

The Client–Host–Server Model (with JSON-RPC 2.0 at Its Core)

MCP follows a three-part architecture of Host, Client, and Server. Every message between a client and a server is encoded as JSON-RPC 2.0.

1. Host

The Host is the LLM application or environment, such as Claude Desktop, Copilot Studio, or a custom AI agent interface, that initiates interactions with external tools. It handles:

- Creation and management of multiple MCP clients

- Orchestration of connection lifecycles and security policies

- Aggregation of context and user intent across sessions

2. Client

Each MCP Client resides within the Host and maintains a dedicated, stateful session with a specific MCP Server. Its responsibilities include:

- Protocol negotiation and capability exchange (e.g., understanding which tools or resources are available)

- Bidirectional message routing (requests, responses, notifications)

- Maintaining isolation and security between multiple server connections

3. Server

The MCP Server is the external component that exposes tools (actions), resources (data), and prompts (interaction templates) to the LLM via the client. It can connect to APIs, databases, file systems, and more, all mediated by the MCP protocol.
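The split between host and clients can be sketched in a few lines of Python. The `Host`, `Client`, and `FakeServer` classes are hypothetical stand-ins, not SDK types. The host creates one client per server. Each client keeps its own session state, namely its own request-id counter and the capabilities its server advertised. That per-client state is what keeps the connections isolated from one another.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class FakeServer:
    """Stand-in for an MCP server; only advertises a fixed set of tool names."""
    name: str
    tools: list[str]


@dataclass
class Client:
    """One client per server, holding per-connection session state."""
    server: FakeServer
    next_id: int = 1
    capabilities: dict[str, Any] = field(default_factory=dict)

    def connect(self) -> None:
        # In real MCP this is the initialize handshake; here we just
        # record what the server offers.
        self.capabilities = {"tools": list(self.server.tools)}

    def new_request_id(self) -> int:
        # Ids only need to be unique within this client's session.
        request_id = self.next_id
        self.next_id += 1
        return request_id


class Host:
    """The LLM application: creates clients and aggregates what they expose."""

    def __init__(self) -> None:
        self.clients: dict[str, Client] = {}

    def add_server(self, server: FakeServer) -> Client:
        client = Client(server)
        client.connect()
        self.clients[server.name] = client
        return client

    def all_tools(self) -> dict[str, list[str]]:
        # The host sees every server's tools, grouped by connection.
        return {name: c.capabilities["tools"] for name, c in self.clients.items()}


host = Host()
files = host.add_server(FakeServer("files", ["read_file"]))
crm = host.add_server(FakeServer("crm", ["update_record", "find_contact"]))
print(host.all_tools())
print(files.new_request_id(), files.new_request_id(), crm.new_request_id())  # 1 2 1
```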

The Protocol & Transport Stack

The architecture is layered and modular, with two critical layers:

Data Layer (Protocol Layer)

- Utilizes JSON-RPC 2.0 for structured message exchange, including support for requests, responses, and notifications.

- Supports lifecycle management: initialization, capability discovery, sessions, and termination.

- Defines the core server primitives (Tools, Resources, and Prompts) and supports notifications for real-time updates.
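The lifecycle starts with a handshake in three steps. The client sends an `initialize` request, and the server replies with its capabilities. The client then confirms with a `notifications/initialized` notification.

The Python sketch below only builds those messages. The protocol version string is assumed to be one published revision of the spec, so check the version your SDK targets. The client name is made up. The sketch also shows the one structural difference JSON-RPC 2.0 draws between the two message kinds: a request has an `id` and expects a reply, while a notification has no `id` and gets none.

```python
import json
from typing import Any, Optional

PROTOCOL_VERSION = "2025-06-18"  # assumption: one published MCP revision


def make_request(request_id: int, method: str,
                 params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """A request carries an id, so the receiver must answer it."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method: str,
                      params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """A notification has no id, so no response is expected."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Step 1: the client opens the session and states what it supports.
initialize = make_request(1, "initialize", {
    "protocolVersion": PROTOCOL_VERSION,
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
})

# Step 2 (after the server replies): the client signals it is ready.
initialized = make_notification("notifications/initialized")

# Step 3: normal operation begins, e.g. capability discovery.
list_tools = make_request(2, "tools/list")

for message in (initialize, initialized, list_tools):
    print(json.dumps(message))
```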

Transport Layer

- Role: Abstracts communication channels and handles authentication, framing, and messaging.

- Stdio Transport: Direct standard I/O streams for local, high-performance communication (e.g., local file access).

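- Streamable HTTP Transport: Remote communication in which the client sends messages as HTTP POST requests. The server can optionally stream responses and updates back using Server-Sent Events (SSE). It supports standard HTTP authentication such as bearer tokens, API keys, and custom headers, with OAuth recommended for obtaining tokens.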
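Both transports carry the same JSON-RPC messages and differ only in framing. Over stdio, each message is serialized as a single line of JSON terminated by a newline, so a message must not contain embedded newlines. Over Streamable HTTP, each client message is POSTed to the server's MCP endpoint with an `Accept` header that allows either a plain JSON reply or an SSE stream.

The Python sketch below shows both. The URL and token are placeholders. The HTTP request is built but never sent, and session headers are omitted.

```python
import io
import json
import urllib.request
from typing import Any, Iterator, TextIO


def write_stdio_message(stream: TextIO, message: dict[str, Any]) -> None:
    """Stdio framing: one JSON-RPC message per line, newline-terminated.
    json.dumps without indent never emits a raw newline, so the line stays intact."""
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_stdio_messages(stream: TextIO) -> Iterator[dict[str, Any]]:
    """Yield one parsed message per non-empty line."""
    for line in stream:
        if line.strip():
            yield json.loads(line)


def build_http_post(url: str, message: dict[str, Any], token: str) -> urllib.request.Request:
    """Streamable HTTP: POST one client message to the server's MCP endpoint.
    The Accept header lists both plain JSON and an SSE stream as acceptable replies."""
    return urllib.request.Request(
        url,
        data=json.dumps(message).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",
            "Authorization": f"Bearer {token}",  # bearer-token auth, one common option
        },
        method="POST",
    )


# Round-trip two messages through an in-memory "pipe".
pipe = io.StringIO()
write_stdio_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_stdio_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
pipe.seek(0)
print(list(read_stdio_messages(pipe)))

# Build (but do not send) an HTTP request; URL and token are placeholders.
req = build_http_post("https://example.com/mcp",
                      {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}, "TOKEN")
print(req.get_method(), req.full_url)
```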

Together, these layers create a resilient, flexible architecture that allows LLMs to interact with external tools and resources securely and efficiently, making MCP a powerful foundation for building scalable AI workflows.

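Condensed Architecture Table

| Component | What it is | Key responsibilities |
|---|---|---|
| Host | The LLM application (e.g., Claude Desktop, Copilot Studio, a custom agent) | Creates and manages MCP clients, orchestrates connection lifecycles and security policies, aggregates context |
| Client | A connector inside the host, one per server | Maintains a stateful session, negotiates capabilities, routes requests, responses, and notifications |
| Server | An external service built on MCP | Exposes tools, resources, and prompts; connects to APIs, databases, and file systems |
| Data layer | The protocol layer | JSON-RPC 2.0 messages, lifecycle management, core primitives |
| Transport layer | The communication channel | Stdio for local connections; Streamable HTTP (optionally with SSE) for remote ones |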

How Does MCP Work?

The Model Context Protocol enables AI models to interact with the external systems they need to complete a task. Large language models can generate text and reason over data, but they cannot directly fetch information from databases, call APIs, or send emails. MCP handles this by coordinating these actions through connected tools.

Here's a step-by-step view of how it functions in practice:

- Identifying the Need

When a user makes a request, the LLM first recognizes that the task requires capabilities it doesn’t have internally, such as querying a database or interacting with a web service.

- Locating Tools via MCP

The LLM, through its MCP client, checks which tools are available. These tools are defined and exposed by MCP servers, such as a reporting service, a calendar manager, or a messaging API.

- Making a Structured Request

Once the right tool is identified, the LLM specifies the tool and its arguments. The MCP client sends them to the server as a structured JSON-RPC 2.0 request. This ensures that the MCP server understands exactly what needs to be done, like "fetch report X from Y source."

- Execution by the Server

The MCP server takes the request and securely performs the actual action, whether that's running a SQL query, retrieving a file, or sending data through an API. The result is then packaged and returned to the LLM.

- Chaining Multiple Actions

Often, one tool's output becomes the input for another. For example, a report pulled from a database may then be passed to an email service. The host feeds each result back to the LLM, which decides on the next tool call. MCP keeps every step in the same structured request/response format.

- Delivering the Final Answer

Once all required tools have been used, the LLM composes a natural-language response to the user, confirming the task is complete and providing the result where relevant.
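The steps above can be condensed into a small host-side loop. In this Python sketch the server is simulated in-process, and both tools (`fetch_report` and `send_email`) are hypothetical. A hard-coded plan stands in for the LLM's decisions. In a real host, the model would choose each call after seeing the previous result.

```python
from typing import Any, Callable

# Simulated MCP server: tool name -> handler (hypothetical tools, stub data).
SERVER_TOOLS: dict[str, Callable[..., Any]] = {
    "fetch_report": lambda report: {"report": report, "total_sales": 254000},
    "send_email": lambda to, body: {"status": "sent", "to": to, "chars": len(body)},
}


def list_tools() -> list[str]:
    """Step 2: discover what the server offers."""
    return sorted(SERVER_TOOLS)


def call_tool(name: str, arguments: dict[str, Any]) -> Any:
    """Steps 3-4: send a structured request; the server executes and returns a result."""
    return SERVER_TOOLS[name](**arguments)


def run_task() -> str:
    available = set(list_tools())
    if not {"fetch_report", "send_email"} <= available:
        raise RuntimeError("required tools are not available")

    # Step 5: chain calls, feeding one tool's output into the next.
    report = call_tool("fetch_report", {"report": "monthly_sales"})
    body = f"Total sales for {report['report']}: {report['total_sales']}"
    receipt = call_tool("send_email", {"to": "manager@example.com", "body": body})

    # Step 6: the model would phrase this itself; a template stands in here.
    return f"Sent the {report['report']} summary to {receipt['to']}."


print(run_task())
```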

By orchestrating these steps, MCP turns LLMs from passive text generators into capable assistants that can safely and efficiently interact with real-world systems.


Integrating MCP with LLMs

This section explains how an LLM communicates with MCP servers via JSON-RPC, illustrates the process with a code example, and details how hosts like Claude Desktop or custom agents connect to MCP servers.

1. How LLMs Invoke MCP Servers via JSON-RPC

MCP uses JSON-RPC 2.0 as its communication backbone. It is a lightweight, structured message format that stays the same across transports (like stdin/stdout or HTTP/SSE). It defines three message types:

- Requests carry the jsonrpc, id, method, and params fields.
- Responses echo the request's id and carry either a result or an error.
- Notifications have a method but no id, so no reply is expected.
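The message type is determined entirely by which fields are present, so a receiver can classify incoming messages with a few checks. This Python helper is an illustrative sketch of the JSON-RPC 2.0 rules, not code from an MCP SDK.

```python
from typing import Any


def classify(message: dict[str, Any]) -> str:
    """Classify a JSON-RPC 2.0 message by the fields it carries."""
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in message:
        # Requests expect a reply (they carry an id); notifications do not.
        return "request" if "id" in message else "notification"
    if "id" in message and ("result" in message) != ("error" in message):
        # A response echoes the request id and has exactly one of result/error.
        return "error response" if "error" in message else "response"
    raise ValueError("malformed message")


examples = [
    {"jsonrpc": "2.0", "id": "req1", "method": "tools/list"},
    {"jsonrpc": "2.0", "method": "notifications/initialized"},
    {"jsonrpc": "2.0", "id": "req1", "result": {"tools": []}},
    {"jsonrpc": "2.0", "id": "req1", "error": {"code": -32601, "message": "Method not found"}},
]
for msg in examples:
    print(classify(msg))
```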

2. Code Snapshot: LLM Invokes a Tool via MCP

Here's a conceptually accurate JSON-RPC request that the LLM's MCP client might send to an MCP server. It invokes a tool named database_query through the tools/call method:

```json
{
  "jsonrpc": "2.0",
  "id": "req1",
  "method": "tools/call",
  "params": {
    "name": "database_query",
    "arguments": {
      "report": "monthly_sales"
    }
  }
}
```

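A spec-conformant response from the MCP server might look like the following. Tool results are wrapped in a content array (here a single text item). Newer revisions of the specification also allow a machine-readable structuredContent field alongside it: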

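```json
{
  "jsonrpc": "2.0",
  "id": "req1",
  "result": {
    "content": [
      {
        "type": "text",
        "text": "{\"report_data\": [{\"month\": \"July\", \"sales\": 120000}, {\"month\": \"August\", \"sales\": 134000}]}"
      }
    ],
    "structuredContent": {
      "report_data": [
        { "month": "July", "sales": 120000 },
        { "month": "August", "sales": 134000 }
      ]
    },
    "isError": false
  }
}
```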

This pattern follows MCP's schema: the client issues a method call (here, tools/call), and the server replies with a JSON-RPC response that carries the same id. The LLM can then turn the returned data into a user-friendly answer.
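On the server side, producing that response is a matter of dispatching on the method and tool name. The Python sketch below answers tools/call for the hypothetical database_query tool from the example, using in-memory stub data in place of a database. It separates two kinds of failure. Protocol problems, such as an unknown method or tool, become JSON-RPC errors with the standard codes -32601 and -32602. A failure inside the tool is reported in the result with isError set, so the model can see what went wrong.

```python
import json
from typing import Any

# Hypothetical in-memory data standing in for a real database.
REPORTS = {
    "monthly_sales": [
        {"month": "July", "sales": 120000},
        {"month": "August", "sales": 134000},
    ]
}

METHOD_NOT_FOUND = -32601  # standard JSON-RPC 2.0 error codes
INVALID_PARAMS = -32602


def make_error(request_id: Any, code: int, message: str) -> dict[str, Any]:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Answer a tools/call request for the hypothetical database_query tool."""
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return make_error(request_id, METHOD_NOT_FOUND, "Method not found")

    params = request.get("params", {})
    if params.get("name") != "database_query":
        return make_error(request_id, INVALID_PARAMS, f"Unknown tool: {params.get('name')}")

    report = params.get("arguments", {}).get("report")
    if report not in REPORTS:
        # Tool-level failure: reported inside the result, not as a protocol error.
        return {"jsonrpc": "2.0", "id": request_id, "result": {
            "content": [{"type": "text", "text": f"No report named {report!r}"}],
            "isError": True,
        }}

    data = {"report_data": REPORTS[report]}
    return {"jsonrpc": "2.0", "id": request_id, "result": {
        "content": [{"type": "text", "text": json.dumps(data)}],
        "structuredContent": data,
        "isError": False,
    }}


request = {"jsonrpc": "2.0", "id": "req1", "method": "tools/call",
           "params": {"name": "database_query", "arguments": {"report": "monthly_sales"}}}
print(json.dumps(handle(request), indent=2))
```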

3. Request/Response Workflow

Here's a sequential breakdown:

1. User prompt → e.g., "Show me last month's sales."

2. LLM formats the request → into JSON-RPC targeting the correct tool (database_query).

3. MCP Server executes → the tool (e.g., performs an SQL query) and replies in JSON.

4. LLM processes result → converts structured data into natural language for the final response.

Importantly, MCP supports both stdio (for local integration) and Streamable HTTP transport (for remote), ensuring flexible deployment options.

4. Configuring Hosts to Use MCP

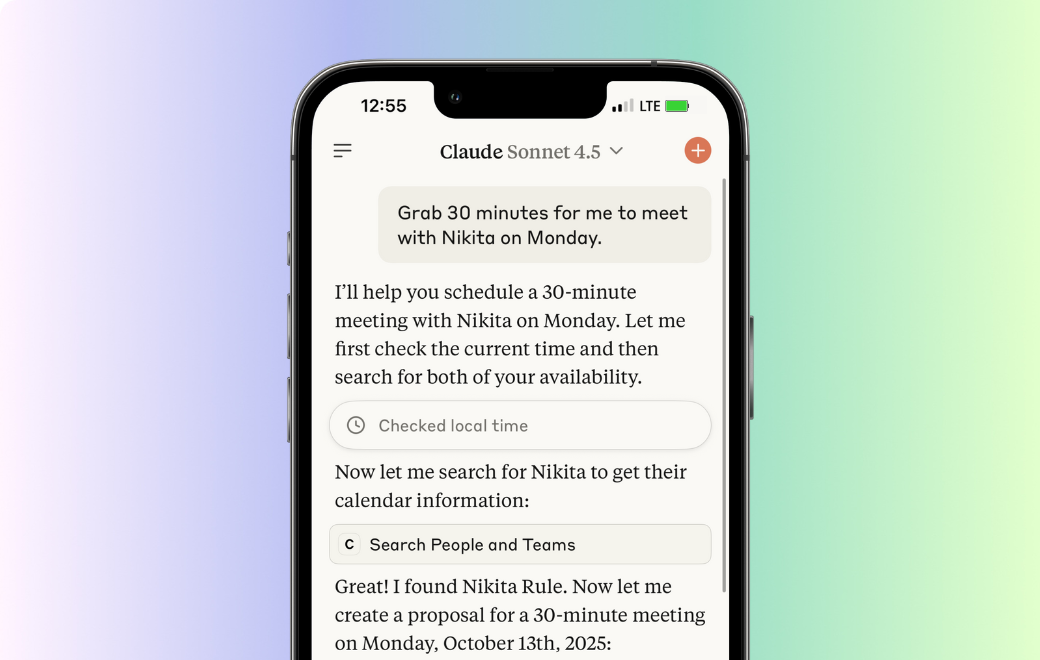

Claude Desktop

- Comes with built-in MCP client support. You simply add the server command in its configuration (e.g., path to your MCP server script), and Claude will discover the available tools automatically.
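Claude Desktop reads its server list from a JSON file named `claude_desktop_config.json`, under an `mcpServers` key. Each entry names the command that launches a local (stdio) server. The Python sketch below builds such an entry. The server name `sales-reports` and the script path are placeholders. The file locations in the comment reflect Anthropic's documentation at the time of writing, so verify them for your platform and version.

```python
import json
from pathlib import Path
from typing import Any

# Typical config locations (verify for your installation):
#   macOS:   ~/Library/Application Support/Claude/claude_desktop_config.json
#   Windows: %APPDATA%\Claude\claude_desktop_config.json


def add_stdio_server(config: dict[str, Any], name: str, command: str,
                     args: list[str]) -> dict[str, Any]:
    """Return a copy of config with one more stdio server under "mcpServers"."""
    updated = json.loads(json.dumps(config))  # cheap deep copy of plain JSON data
    updated.setdefault("mcpServers", {})[name] = {"command": command, "args": args}
    return updated


def save(config: dict[str, Any], path: Path) -> None:
    """Write the config back as pretty-printed JSON."""
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")


config = add_stdio_server({}, "sales-reports", "python", ["/absolute/path/to/server.py"])
print(json.dumps(config, indent=2))
# save(config, Path("claude_desktop_config.json"))
```

After saving the file, restart Claude Desktop so it launches the server and discovers its tools.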

Custom AI Agents or Hosts

- You implement an MCP client:
  - Define the transport (stdio or HTTP).
  - Manage JSON-RPC messaging.
  - Perform capability discovery (e.g., tools/list).
  - Invoke tools dynamically as needed.

The official MCP SDKs handle these steps for you, and setup guides like the MCP "Build a Server" tutorial walk through them.
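The four client responsibilities listed above can all be seen in one small Python sketch:

- Choosing a transport: it launches a toy server as a subprocess and talks to it over stdio.
- Managing JSON-RPC messaging: it exchanges newline-delimited JSON-RPC messages with that process.
- Capability discovery: it performs the initialize handshake and lists tools with tools/list.
- Invoking tools: it calls one of the discovered tools.

The toy server and its `echo` tool are hypothetical. The server implements only what this demo needs and is not a complete MCP server. The protocol version string is an assumed revision. In practice you would use an official MCP SDK on both sides.

```python
import json
import subprocess
import sys
from typing import Any, Optional

# A toy stdio server, run as a child process. It is NOT a full MCP server.
SERVER_SRC = r'''
import json, sys
for line in sys.stdin:
    msg = json.loads(line)
    if "id" not in msg:          # notifications get no reply
        continue
    method = msg["method"]
    if method == "initialize":
        result = {"protocolVersion": msg["params"]["protocolVersion"],
                  "capabilities": {"tools": {}},
                  "serverInfo": {"name": "toy-server", "version": "0.1.0"}}
    elif method == "tools/list":
        result = {"tools": [{"name": "echo", "description": "Echo text back",
                             "inputSchema": {"type": "object",
                                             "properties": {"text": {"type": "string"}}}}]}
    elif method == "tools/call":
        text = msg["params"]["arguments"]["text"]
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        print(json.dumps({"jsonrpc": "2.0", "id": msg["id"],
                          "error": {"code": -32601, "message": "Method not found"}}), flush=True)
        continue
    print(json.dumps({"jsonrpc": "2.0", "id": msg["id"], "result": result}), flush=True)
'''


class StdioClient:
    """Minimal client: one child process, one session, sequential requests."""

    def __init__(self, argv: list[str]) -> None:
        self.proc = subprocess.Popen(argv, stdin=subprocess.PIPE,
                                     stdout=subprocess.PIPE, text=True)
        self.next_id = 1

    def _send(self, message: dict[str, Any]) -> None:
        assert self.proc.stdin is not None
        self.proc.stdin.write(json.dumps(message) + "\n")  # one message per line
        self.proc.stdin.flush()

    def request(self, method: str, params: Optional[dict[str, Any]] = None) -> Any:
        msg: dict[str, Any] = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            msg["params"] = params
        self.next_id += 1
        self._send(msg)
        assert self.proc.stdout is not None
        reply = json.loads(self.proc.stdout.readline())  # wait for the matching reply
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]

    def notify(self, method: str) -> None:
        self._send({"jsonrpc": "2.0", "method": method})

    def close(self) -> None:
        assert self.proc.stdin is not None and self.proc.stdout is not None
        self.proc.stdin.close()  # EOF ends the server's read loop
        self.proc.wait(timeout=5)
        self.proc.stdout.close()


client = StdioClient([sys.executable, "-c", SERVER_SRC])
init = client.request("initialize", {
    "protocolVersion": "2025-06-18",  # assumption: one published revision
    "capabilities": {},
    "clientInfo": {"name": "custom-host", "version": "0.1.0"},
})
client.notify("notifications/initialized")
tools = client.request("tools/list")["tools"]
print([t["name"] for t in tools])
print(client.request("tools/call", {"name": "echo", "arguments": {"text": "hello"}}))
client.close()
```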

Benefits of Using MCP

The Model Context Protocol (MCP) transforms how large language models (LLMs) operate by turning them into connected, action-oriented systems. Instead of being limited to static training data, LLMs gain the ability to interact with external services, giving them higher accuracy, broader utility, and easier integration into real workflows.

1. More Accurate Answers, Fewer Hallucinations

One of the biggest criticisms of LLMs is their tendency to "hallucinate": confidently providing information that sounds right but is false. MCP reduces this by letting models fetch information directly from trusted sources (databases, APIs, knowledge bases) instead of guessing. When you ask about a company's latest financials or a live weather update, the LLM can query the actual data rather than rely on old training snapshots.

2. Expanding AI's Capabilities

Traditionally, LLMs were locked inside a chat window. With MCP, they gain the ability to act like digital operators. Want to update a CRM record, run a SQL query, or draft and send an email? Instead of needing multiple manual integrations, the LLM can call MCP-enabled tools to handle these tasks end-to-end. This unlocks true automation. LLMs shift from being passive Q&A bots to proactive assistants that execute business logic in real time.

3. Standardized Integrations = Faster Development

Before MCP, connecting models to tools was messy. Every model–tool pairing needed its own custom connector, so N models and M tools could require up to N × M integrations. This is the infamous “N × M” problem.

With MCP's open standard, each host implements the protocol once and each tool is wrapped in one server. Any compliant server can then be plugged into any compliant host with minimal work. This is the same principle that made USB-C so valuable: one connection standard, many compatible devices. For developers, that means:

- Lower costs for integration and maintenance.

- Quicker rollouts of AI-powered features.

- Easier switching between LLM vendors without breaking existing toolchains.
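The arithmetic behind the “N × M” problem is easy to make concrete. Suppose there are N hosts (LLM applications) and M tools. Custom integration needs one connector per host–tool pair. A shared protocol needs only one client implementation per host plus one server per tool. The numbers below are purely illustrative:

$$
\underbrace{N \times M}_{\text{custom connectors}} \quad\text{vs.}\quad \underbrace{N + M}_{\text{MCP clients + servers}}, \qquad N = 5,\; M = 20:\quad 5 \times 20 = 100 \;\text{vs.}\; 5 + 20 = 25.
$$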

By providing reliable data access, expanded functionality, and simplified integrations, MCP empowers LLMs to move beyond static outputs and become practical, action-oriented AI assistants ready for real-world applications.

While MCP unlocks powerful capabilities and streamlined integrations for LLMs, connecting to external systems also introduces important security considerations that developers must address.

Security in the MCP Ecosystem

Giving LLMs the power to interact with external systems is powerful, but it also raises critical security questions. An unsafe MCP setup could expose sensitive data, run malicious tools, or lead to misuse. To prevent this, MCP implementations should be guided by a security-first mindset.

1. User Consent and Transparency

No action should happen silently in the background. Users must always know when an MCP server is being accessed and what data is being shared. Clear authorization prompts and human-friendly consent screens are vital.

2. Protecting Data Privacy

Since MCP can connect to systems containing private or sensitive information, data should only flow when explicitly approved. Strong authentication (OAuth, API keys, bearer tokens) plus encryption helps ensure information isn't leaked or intercepted.

3. Safe Use of Tools

Each MCP tool effectively gives the LLM new abilities, sometimes even to execute code. That power should be restricted:

- Only allow tools from trusted servers.

- Require user confirmation before running critical actions.

- Provide clear explanations of what each tool does.
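The first two rules translate naturally into a gate that every tool call passes through. In this Python sketch the allowlist, the set of critical tools, and the `guarded_call` helper are all hypothetical. The `confirm` callback stands in for whatever consent dialog a real host shows. The prompt string covers the third rule in part, because it tells the user exactly what is about to run.

```python
from typing import Any, Callable

TRUSTED_SERVERS = {"internal-crm"}                 # hypothetical allowlist
CRITICAL_TOOLS = {"delete_record", "send_email"}   # hypothetical: need user approval


class ToolCallRejected(Exception):
    """Raised when a tool call is blocked by policy or by the user."""


def guarded_call(server: str, tool: str, arguments: dict[str, Any],
                 execute: Callable[[str, dict[str, Any]], Any],
                 confirm: Callable[[str], bool]) -> Any:
    """Run a tool only if its server is trusted and, for critical tools, the user agrees."""
    if server not in TRUSTED_SERVERS:
        raise ToolCallRejected(f"server {server!r} is not on the allowlist")
    if tool in CRITICAL_TOOLS:
        prompt = f"Allow {tool} on {server} with {arguments}?"
        if not confirm(prompt):
            raise ToolCallRejected(f"user declined {tool}")
    return execute(tool, arguments)


def fake_execute(tool: str, arguments: dict[str, Any]) -> dict[str, Any]:
    # Stand-in for forwarding the call to the MCP server.
    return {"tool": tool, "ok": True}


print(guarded_call("internal-crm", "find_contact", {"name": "Ada"}, fake_execute,
                   confirm=lambda p: False))  # non-critical: no prompt needed
try:
    guarded_call("internal-crm", "delete_record", {"id": 7}, fake_execute,
                 confirm=lambda p: False)
except ToolCallRejected as exc:
    print("blocked:", exc)
```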

4. Secure Output Handling

If an LLM fetches external content (like HTML or scripts), displaying it without sanitization could open the door to attacks like XSS. Any response passed back to users must be cleaned and validated before being surfaced in applications.
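A minimal sketch of this rule in Python uses the standard library's `html.escape`, which escapes tool output before it is placed into a web page. The `render_tool_text` helper and the CSS class name are hypothetical. Escaping is only one layer of defense. If you genuinely need to render HTML returned by a tool, an allowlist-based sanitizer is the more appropriate choice.

```python
import html


def render_tool_text(untrusted: str) -> str:
    """Escape tool output so the browser shows it as text instead of running it."""
    return f'<pre class="tool-output">{html.escape(untrusted)}</pre>'


payload = '<script>alert("pwned")</script>'
print(render_tool_text(payload))
# <pre class="tool-output">&lt;script&gt;alert(&quot;pwned&quot;)&lt;/script&gt;</pre>
```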

5. Supply Chain Integrity

MCP relies on many moving parts: hosts, servers, and external APIs. Any weak link could introduce vulnerabilities or biased behavior. Organizations must treat this as a supply chain problem, ensuring every component (and vendor) meets security standards.

6. Monitoring and Auditing

Finally, visibility is key. Robust logging of requests, responses, and tool usage lets teams detect anomalies like an unexpected data export or unauthorized query and act quickly. Continuous monitoring helps turn MCP from a risk into a trusted, auditable framework.
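One simple way to get this visibility is to wrap every tool call so that it writes one structured JSON line to an audit log, whether the call succeeds or fails. The `audited` helper below is a hypothetical Python sketch. It writes to an in-memory stream for the demo, where production code would use an append-only file or a log pipeline. It logs arguments verbatim, so redact secrets before adopting this pattern.

```python
import io
import json
import time
from typing import Any, Callable, TextIO


def audited(log: TextIO, server: str, tool: str, arguments: dict[str, Any],
            execute: Callable[[str, dict[str, Any]], Any]) -> Any:
    """Call a tool and append one JSON line describing the call to an audit log."""
    record: dict[str, Any] = {"ts": time.time(), "server": server, "tool": tool,
                              "arguments": arguments}
    try:
        result = execute(tool, arguments)
        record["status"] = "ok"
        return result
    except Exception as exc:
        record["status"] = "error"
        record["error"] = repr(exc)
        raise  # the failure is logged, then surfaced to the caller
    finally:
        log.write(json.dumps(record) + "\n")
        log.flush()


audit_log = io.StringIO()  # in production: an append-only file or log pipeline
audited(audit_log, "warehouse", "run_sql", {"query": "SELECT 1"}, lambda t, a: [[1]])
print(audit_log.getvalue())
```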

By following these principles, developers can confidently use MCP to extend LLM capabilities while keeping data, tools, and workflows secure. A strong security foundation ensures that powerful AI interactions remain reliable, auditable, and safe for real-world applications.

Wrapping Up

The Model Context Protocol (MCP) is still in its early stages, but it's quickly proving to be a powerful bridge between LLMs and the external tools they need to work effectively. By running MCP servers and connecting them to hosts like Claude Desktop or custom AI agents, you can move beyond static Q&A and let your AI actually interact with files, databases, APIs, and workflows in a structured, secure way.

The core idea is simple: LLMs don't have direct access to the outside world, but MCP gives them a safe and standardized way to request help. Whether it's querying a database, sending an email, or retrieving a JSON file, MCP formalizes these interactions into clear requests and responses.

As more developers build and publish MCP servers, the ecosystem will keep expanding, making it easier to equip AI systems with specialized tools without reinventing the wheel. If you're experimenting today, even a small proof-of-concept server can show how MCP turns abstract AI into a hands-on assistant that gets real work done.

FAQs

1. Is MCP the same as using plugins or extensions in AI models?

No. Plugins are usually tied to a specific platform (like ChatGPT plugins), while MCP is a general protocol that any LLM host can adopt. This makes MCP more flexible and future-proof.

2. Does MCP require internet access for every request?

Not always. MCP servers can run locally, meaning you can set up private, offline integrations (like querying local files or databases) without exposing data to the internet.

3. What are some real-world use cases of MCP?

MCP can be used for tasks like database queries, scheduling, connecting with CRM systems, monitoring cloud infrastructure, or even controlling developer tools like Git and Docker.

4. How does MCP handle authentication and security?

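MCP doesn't impose a single security model. The specification defines an optional OAuth-based authorization flow for HTTP-based transports, while local stdio servers typically take credentials from their environment. Beyond that, it depends on how the server and host are set up: developers can implement authentication, permissions, and encryption to protect sensitive data.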

5. Is MCP only for Claude, or can other LLMs use it too?

While Anthropic introduced MCP for Claude, the protocol itself is open and not Claude-specific. Other LLM hosts (including open-source agents and custom AI frameworks) can adopt it.
