Since its introduction in late 2024, MCP (Model Context Protocol) has gained significant traction, with over 5,000 active MCP servers listed in the Glama MCP Server Directory as of mid-2025. This surge in adoption highlights a clear demand for more efficient and context-aware AI systems.

Industry leaders, including OpenAI and Google DeepMind, have already incorporated MCP into their offerings, solidifying its place as a key player in AI development.

This post explores the architecture and benefits of MCP and explains how it lets AI models access real-time data, make better-informed decisions, and automate tasks with minimal manual intervention.

Key Takeaways

- MCP simplifies integration by providing a universal interface for AI models to interact with various external systems, reducing the need for one-off custom integrations.

- By adding real-time data and external tools to AI models, MCP enables more intelligent, goal-oriented workflows, allowing for better decision-making and task execution.

- MCP’s support for multiple transport mechanisms and robust security measures ensures that it can scale across different environments, from local development to production systems.

- The protocol’s support for modular, nested tool interactions makes it highly scalable, allowing AI systems to tackle increasingly complex tasks by dynamically discovering and using external resources.

What is MCP?

Model Context Protocol (MCP) is an open protocol developed to facilitate seamless interaction between AI models, especially large language models (LLMs), and external tools, data sources, and services.

Whether you're building an AI-powered IDE, enhancing a chat interface, or creating custom AI workflows, MCP provides a standardized way to connect LLMs with the context they need.

In practice, MCP serves as a bridge that lets LLMs go beyond static outputs. It gives these models access to external tools, data sources, and services, allowing them to make more informed decisions based on up-to-date information.

MCP vs. Traditional APIs

While APIs have been essential for connecting AI systems with external data, they often come with some key limitations:

- Custom Integrations: Each tool or service requires custom code to integrate with AI systems, creating inefficiencies.

- Lack of Context: APIs typically provide raw data or functionality, without giving AI models the context they need to understand when or why to call those functions.

MCP addresses these issues by:

- Streamlining Integration: Rather than coding custom solutions for each service, MCP offers a single interface that connects to many external tools.

- Enabling Contextual Interactions: With MCP, AI models can understand not just what to do, but why and how to do it, based on the context provided by the external tools and data.

- Easier Switching Between Services: Thanks to its standardized approach, switching between services and tools is much easier with MCP, because it avoids rewriting integration code for each new API.

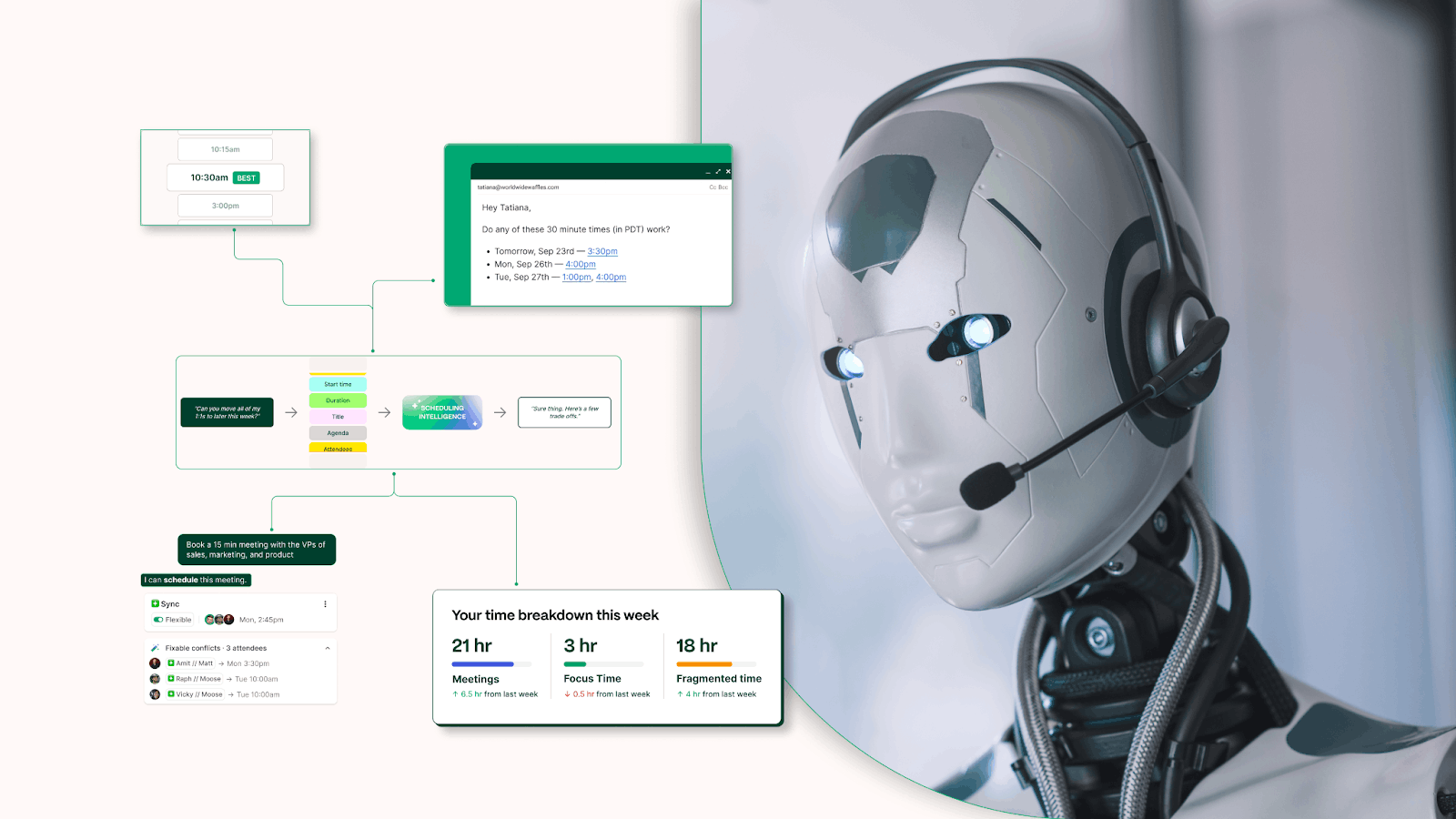

For example, if you’re using an AI scheduling tool like Clockwise, a system like MCP could, in principle, enable your AI to interact with it, helping not just to add meetings to your calendar, but to optimize them around focus time, team availability, and potentially your task priorities or goals. This kind of integration would make scheduling smarter and more personalized.

Why is MCP essential for AI Models?

AI models, especially large language models (LLMs), are powerful but limited by the data they’ve been trained on. They can’t access real-time information, which restricts their ability to handle tasks that require up-to-date context or external resources. Without this context, LLMs often fall short in practical applications that involve dynamic or real-time decision-making.

Consider the following examples:

- Drafting an Email: An LLM can generate text based on general instructions, but it can't access specific information like a customer’s previous interactions or the latest project updates.

- Data Analysis: Without the ability to query a database or fetch recent reports, an AI model can’t produce meaningful insights or analysis for ongoing projects.

- Task Automation: AI models cannot interact with tools (like scheduling software or project management tools) to take actions on behalf of the user.

Without a system like MCP, these tasks would require separate custom integrations with each data source or service, making the process fragmented, inefficient, and prone to errors.

The Fragmentation Problem

Before MCP, connecting AI models to external systems involved custom integrations for each service. This created several challenges:

- Complex and Time-Consuming: Each integration needed separate code, increasing development time and costs.

- Lack of Interoperability: Tools and services expose their data in different formats and protocols, making it difficult for AI systems to interact with them consistently.

- Maintenance Burden: Maintaining custom integrations across a growing number of tools increases complexity and risks of outdated or broken links.

MCP addresses this problem by providing a standardized interface that allows AI models to access tools and data without needing custom code for each one, simplifying both the initial setup and ongoing maintenance.

To understand how MCP addresses these challenges, it’s important first to examine its core architecture and key components.

Core Architecture of MCP

MCP follows a client-server architecture, providing a flexible and scalable framework for AI models to interact with external tools and data sources. It allows AI models (such as large language models) to access a wide range of resources in a standardized manner.

The main components of MCP are:

1. MCP Hosts

The applications or systems that initiate interactions with MCP servers. These could be AI applications such as Claude Desktop, IDEs, or any AI-powered platform that needs to access external tools.

2. MCP Clients

The interface within the host that establishes a 1:1 connection with an MCP server. It handles sending requests and receiving responses between the host application and the server (external service).

3. MCP Servers

Lightweight applications that expose specific functionalities like data access, tools, or prompts. These servers act as the bridge, making external services accessible to the AI models.
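As a rough illustration of how these three roles relate, the sketch below models a host that owns one client per connected server. The class and method names (Host, Client, Server, call, handle) are hypothetical and not taken from any MCP SDK, and a real client would talk to its server over a transport rather than through a direct method call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Server:
    """A lightweight server exposing named tools (hypothetical model)."""
    name: str
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)

    def handle(self, tool: str, **arguments) -> str:
        return self.tools[tool](**arguments)


@dataclass
class Client:
    """Lives inside the host and holds a 1:1 connection to a single server."""
    server: Server

    def call(self, tool: str, **arguments) -> str:
        # A real client would serialize this call and send it over a transport.
        return self.server.handle(tool, **arguments)


@dataclass
class Host:
    """The AI application; it creates one client per connected server."""
    clients: Dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> None:
        self.clients[server.name] = Client(server)


host = Host()
host.connect(Server("todo", {"add_task": lambda title: f"added: {title}"}))
host.connect(Server("calendar", {"schedule_event": lambda title, time: f"{title} at {time}"}))

print(host.clients["todo"].call("add_task", title="Write report"))
print(host.clients["calendar"].call("schedule_event", title="Write report", time="09:00"))
```

Keeping one client per server means each connection can be configured, secured, and torn down independently of the others.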

Transport Mechanisms in MCP

One of the key strengths of MCP is its ability to support different transport mechanisms, enabling communication between clients and servers across various environments. This flexibility is achieved through several transport layers, ensuring seamless integration whether the communication happens locally or over the internet.

1. STDIO (Standard Input/Output)

Used for local, process-based communication. This is ideal for development and testing, where the server and client run on the same machine.

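2. Streamable HTTP

Used for communication between clients and remote servers. The client sends messages as HTTP POST requests, and the server can optionally use Server-Sent Events (SSE) to stream responses and notifications back, which is especially useful for asynchronous and continuous data flows.

3. WebSockets

An alternative for real-time, two-way communication, particularly when low-latency interactions are required between clients and servers. The MCP specification itself defines only STDIO and Streamable HTTP as standard transports, so a WebSocket connection would be implemented as a custom transport.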

These transport layers are key to MCP’s flexibility, allowing it to function across different systems and environments, whether local or distributed.
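To make the local case more concrete, here is a minimal sketch of STDIO-style framing, assuming each JSON-RPC message is written as a single line of JSON terminated by a newline. The helper names write_message and read_messages are made up for this example, and an in-memory io.StringIO stands in for the pipe between the two processes.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    """Serialize one JSON-RPC message as a single line."""
    # json.dumps escapes newlines inside strings, so each message stays on one line.
    stream.write(json.dumps(message) + "\n")


def read_messages(stream):
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# io.StringIO stands in for the pipe between the client and server processes.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})

pipe.seek(0)
received = list(read_messages(pipe))
print(received)
```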

Communication Format: JSON-RPC 2.0

All communication between MCP clients and servers follows the JSON-RPC 2.0 protocol, which standardizes the format of messages exchanged. This format ensures that data is transmitted in a structured and consistent way, reducing the complexity for developers.

- Request-Response Model: The client sends a request, and the server returns a response. This is the typical interaction model for most MCP calls.

- Notifications: In addition to requests and responses, MCP supports one-way messages that don’t require a reply, allowing for more efficient communication.

The use of JSON-RPC 2.0 ensures that all data exchanged between AI systems and external tools is consistent, predictable, and easy to parse.
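The three message kinds can be sketched with plain dictionaries. The helper functions below are hypothetical conveniences written for this post, not part of any SDK; the field names (jsonrpc, id, method, params, result) come from JSON-RPC 2.0, and the add_task tool is the illustrative one used later in this article.

```python
import json
from itertools import count

_ids = count(1)  # simple source of unique request ids


def make_request(method: str, params: dict = None) -> dict:
    """A request carries an id so the response can be matched to it."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_notification(method: str, params: dict = None) -> dict:
    """A notification has no id, so the receiver must not reply to it."""
    message = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_response(request: dict, result: dict) -> dict:
    """A response echoes the id of the request it answers."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


request = make_request("tools/call", {"name": "add_task", "arguments": {"title": "Write report"}})
response = make_response(request, {"content": [{"type": "text", "text": "Task added"}]})
notification = make_notification("notifications/progress", {"progressToken": "t1", "progress": 50})

for message in (request, response, notification):
    print(json.dumps(message))
```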

MCP vs Traditional APIs: What’s the Real Difference?

A common critique of MCP is that it's simply a repackaged tool or just another layer on top of traditional APIs. MCP does facilitate communication between AI models and external tools, just as APIs do, but this comparison misses the key advantage that MCP provides.

In its simplest form, yes, you can view MCP as a wrapper around existing APIs, but the difference lies in how it changes the way AI models reason about when and why to use those tools.

Why Not Just Use REST or gRPC?

While traditional APIs like REST or gRPC can connect AI models to external services, they fall short in several ways when it comes to working with LLMs:

REST APIs

While well-defined and machine-readable, they tell you what to do but not why or when to do it. For example, a REST API might expose a /todos endpoint that lets you create a new task, but it won’t explain how that task fits into a larger goal like “organizing my day” or “focusing on deep work.”

gRPC

While gRPC supports efficient, structured communication and reflection (letting clients dynamically discover functions), it was not built with language models in mind. Its compact binary format and terse schemas make it hard for LLMs to understand and use without additional tooling or translation.

In contrast, MCP:

- Adds Context: With MCP, tools carry natural-language descriptions, and servers can expose prompts that describe the intent behind an action. For example, instead of simply exposing add_task, a server can provide context, like: “Given the user’s goal of {user_goal}, break it down into actionable tasks and call add_task to add them to the todo list.”

- Improves Reasoning: MCP enables AI models to reason about tool usage. Instead of blindly invoking an API call, the LLM can decide when to use a tool and why it’s necessary to do so, creating more intelligent workflows.
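The prompt quoted above can be thought of as a template that a server fills in before handing it to the model. The following is only a sketch: the constant and the plan_daily_tasks function are illustrative stand-ins, not the API of a real MCP server.

```python
# Template text taken from the example above; {user_goal} is filled in per request.
PLAN_DAILY_TASKS_PROMPT = (
    "Given the user's goal of {user_goal}, break it down into actionable "
    "tasks and call add_task to add them to the todo list."
)


def plan_daily_tasks(user_goal: str) -> str:
    """Return the guidance text the model would receive for this goal."""
    return PLAN_DAILY_TASKS_PROMPT.format(user_goal=user_goal)


prompt = plan_daily_tasks("focusing on deep work")
print(prompt)
```

The model receives both the tool name (add_task) and the reason to call it, which is the contextual layer that a bare endpoint does not carry.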

Reflection and Dynamic Discovery with MCP

A major advantage of MCP over REST and gRPC is its ability to discover available tools and functions dynamically. This is crucial for models that need to understand which tools are relevant to the current task.

Reflection: MCP makes reflection part of the protocol itself. Models can ask the server what functions are available and how to use them, which means they can work with new tools without pre-coded instructions, making integrations more flexible and future-proof.

Intent-based communication: MCP enables communication in which AI models understand not only how to use a tool but also why. This added layer of semantic understanding makes workflows more robust and adaptable.

Bridging traditional RPC and AI-driven tool use: MCP serves as a bridge between traditional API systems (like REST and gRPC) and AI-driven tool usage. It incorporates the efficiency and structure of RPC systems while adding the intent and reflection capabilities that AI models need to reason about tools.
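A small sketch of discovery: the client sends a tools/list request, and the server answers with each tool's name, description, and input schema. The handle and describe_tools functions and the single add_task tool are assumptions made for this example; a real server would implement many more methods.

```python
# One illustrative tool definition: name, human-readable description, JSON Schema for inputs.
TOOLS = [
    {
        "name": "add_task",
        "description": "Add a task to the user's todo list.",
        "inputSchema": {
            "type": "object",
            "properties": {"title": {"type": "string"}},
            "required": ["title"],
        },
    }
]


def handle(request: dict) -> dict:
    """Toy server-side handler that only understands tools/list."""
    if request["method"] == "tools/list":
        return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": TOOLS}}
    raise NotImplementedError(request["method"])


def describe_tools(response: dict) -> str:
    """Turn a tools/list result into text a model could read."""
    lines = []
    for tool in response["result"]["tools"]:
        arguments = ", ".join(tool["inputSchema"].get("properties", {}))
        lines.append(f"{tool['name']}({arguments}): {tool['description']}")
    return "\n".join(lines)


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
print(describe_tools(listing))
```

Because the description and schema travel with the tool, a client can present newly added tools to the model without any code change on the client side.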

MCP Enabling Agentic AI Workflows

Agentic AI refers to AI systems that can reason, plan, and take autonomous actions to achieve specific goals. MCP plays a pivotal role in enabling agentic workflows by connecting AI models to external tools and resources, allowing the AI to act autonomously across different domains. This turns AI from a reactive system into a proactive agent that can iterate, reflect on actions, and adjust its plans without needing to be manually instructed at every step.

How MCP Facilitates Agentic Workflows

MCP enables agentic AI systems by providing a framework where AI models can reason about their actions and choose which tools to use based on the current context. Here's how it works:

- Tools to Act: MCP allows AI models to use external tools (such as APIs, databases, or file systems) to take actions, like scheduling events or fetching data, as part of a broader task.

- Memory of Past Steps: The results of past tool calls can be kept in the model's context by the host or persisted behind an MCP server, enabling the AI to review earlier steps and adjust its approach as needed.

- Iterative Reasoning: Instead of making a single decision and stopping, agentic AI systems powered by MCP can reflect on their actions and plan their next steps iteratively, just like humans.
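The three ingredients above (tools, memory, iteration) can be sketched as a simple loop. Everything here is a stand-in: run_agent, scripted_decide, and the two tools are hypothetical, and the decide function plays the role that an LLM would play in a real system.

```python
from typing import Callable, Dict, List, Optional, Tuple

Action = Tuple[str, dict]  # (tool name, arguments)


def run_agent(
    goal: str,
    decide: Callable[[str, List[dict]], Optional[Action]],
    tools: Dict[str, Callable[..., str]],
    max_steps: int = 10,
) -> List[dict]:
    """Repeatedly pick a tool, run it, and record the outcome until done."""
    history: List[dict] = []  # memory of past steps
    for _ in range(max_steps):
        action = decide(goal, history)  # iterative reasoning over the history
        if action is None:
            break
        name, arguments = action
        result = tools[name](**arguments)  # tool call
        history.append({"tool": name, "arguments": arguments, "result": result})
    return history


def scripted_decide(goal: str, history: List[dict]) -> Optional[Action]:
    """Scripted stand-in for the model's decision at each step."""
    if not history:
        return ("fetch_data", {"query": goal})
    if history[-1]["tool"] == "fetch_data":
        return ("summarize", {"text": history[-1]["result"]})
    return None  # nothing left to do


tools = {
    "fetch_data": lambda query: f"3 records matching '{query}'",
    "summarize": lambda text: f"Summary: {text}",
}

history = run_agent("open invoices", scripted_decide, tools)
for step in history:
    print(step["tool"], "->", step["result"])
```

The max_steps cap is a design choice worth keeping in any real loop, since it bounds how long an agent can keep acting without supervision.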

MCP provides the context and guidance necessary for LLMs to reason through complex tasks and decide what to do next, making the AI system capable of working through tasks without direct user intervention at every step. To illustrate the power of MCP in real-world applications, let's walk through an example of how MCP enables goal-oriented AI systems.

Example Workflow: Goal-Oriented AI Systems

One of the most powerful features of MCP is its ability to coordinate different tools to achieve a complex goal. Let’s demonstrate a simple agentic workflow where an LLM invokes tools from multiple MCP servers based on returned prompts.

Here are the servers we will be working with:

Todo List MCP Server

Calendar MCP Server

Agentic Workflow in Action

Now, let’s imagine we have a chatbot with access to the context provided by these MCP servers. When a user provides a high-level goal like “I want to focus on deep work today,” the MCP client coordinates a modular, multi-server workflow to fulfill the request. It packages the user message, along with tool metadata and prompt instructions from all connected MCP servers, and sends it to the LLM.

Step 1: The LLM first selects a high-level planning tool, plan_daily_tasks, from the Todo List Server. It returns a prompt directing the LLM to break down the goal into actionable tasks using the add_task function.

Step 2: As tasks are created, the LLM is notified and decides to schedule them by invoking the schedule_todo_task prompt on the Calendar Server. The server responds with new guidance to use schedule_event, at which point the LLM finalizes the day’s plan with specific times.
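The two steps can be simulated end to end with plain Python objects. This is a sketch under several assumptions: the TodoServer and CalendarServer classes are in-memory stand-ins for real MCP servers, the task titles and time slots are invented, and the scripted sequence at the bottom replaces the choices an LLM would make.

```python
class TodoServer:
    """In-memory stand-in for the Todo List MCP Server."""

    def __init__(self):
        self.tasks = []

    def plan_daily_tasks(self, user_goal: str) -> str:
        # Returns guidance for the model rather than performing the work itself.
        return (
            f"Given the user's goal of {user_goal}, break it down into "
            "actionable tasks and call add_task to add them to the todo list."
        )

    def add_task(self, title: str) -> str:
        self.tasks.append(title)
        return f"Task added: {title}"


class CalendarServer:
    """In-memory stand-in for the Calendar MCP Server."""

    def __init__(self):
        self.events = []

    def schedule_todo_task(self, title: str) -> str:
        return f"Pick a free slot for '{title}' and call schedule_event."

    def schedule_event(self, title: str, start: str) -> str:
        self.events.append((start, title))
        return f"Scheduled '{title}' at {start}"


todo, calendar = TodoServer(), CalendarServer()
transcript = []

# Step 1: plan, then create tasks (titles are what the model might propose).
transcript.append(todo.plan_daily_tasks("focus on deep work today"))
for title in ["Draft design doc", "Review pull requests"]:
    transcript.append(todo.add_task(title))

# Step 2: for each task, ask the calendar server for guidance, then book a slot.
for title, start in zip(todo.tasks, ["09:00", "11:00"]):
    transcript.append(calendar.schedule_todo_task(title))
    transcript.append(calendar.schedule_event(title, start))

print("\n".join(transcript))
```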

To keep your day aligned with your goals, a scheduling assistant like Clockwise can be integrated into this workflow. Once tasks are generated and ready to be scheduled, Clockwise can help manage your calendar intelligently, finding open time slots, protecting blocks for deep work, and shifting non-urgent meetings if needed. This ensures that the plan created through the MCP system actually fits into your day without manual adjustments.

MCP Nesting: Microservices for AI Agents

MCP goes beyond simple task execution by supporting nesting, the ability for MCP servers to act as clients to other MCP servers. This creates a composable ecosystem where different servers can delegate specific tasks to others, forming a larger network of connected tools.

This “microservice” approach means that one server can be responsible for a high-level task (e.g., generating a report) while other servers handle specialized tasks (e.g., fetching data, performing calculations, formatting the report). These interconnected servers can form complex workflows that mimic the behavior of a human agent.

Example:

- A Dev-Scaffolding MCP Server could act as an orchestrator, guiding an AI through the process of generating a new app feature by delegating specific tasks to other servers, like code generation, API specification, and testing.

This ability to chain servers together in a modular fashion enhances the scalability of AI-driven systems, making them more flexible and capable of handling complex, multi-step processes.
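A rough sketch of nesting: the orchestrator below looks like a server to its caller while acting as a client to three downstream servers. The class names, the tool names (scaffold_feature, write_spec, generate_code, generate_tests), and the string outputs are all hypothetical, and direct method calls replace real client connections.

```python
from typing import Callable, Dict


class LeafServer:
    """A specialized server that exposes a few tools."""

    def __init__(self, tools: Dict[str, Callable[..., str]]):
        self.tools = tools

    def call_tool(self, tool: str, **arguments) -> str:
        return self.tools[tool](**arguments)


class OrchestratorServer:
    """Exposes one high-level tool and delegates sub-steps to other servers."""

    def __init__(self, downstream: Dict[str, LeafServer]):
        # In a real deployment, each entry would be a client connection.
        self.downstream = downstream

    def call_tool(self, tool: str, **arguments) -> dict:
        if tool != "scaffold_feature":
            raise KeyError(f"unknown tool: {tool}")
        feature = arguments["feature"]
        spec = self.downstream["api_spec"].call_tool("write_spec", feature=feature)
        code = self.downstream["codegen"].call_tool("generate_code", spec=spec)
        tests = self.downstream["testing"].call_tool("generate_tests", code=code)
        return {"spec": spec, "code": code, "tests": tests}


orchestrator = OrchestratorServer(
    {
        "api_spec": LeafServer({"write_spec": lambda feature: f"spec for {feature}"}),
        "codegen": LeafServer({"generate_code": lambda spec: f"code from {spec}"}),
        "testing": LeafServer({"generate_tests": lambda code: f"tests for {code}"}),
    }
)

result = orchestrator.call_tool("scaffold_feature", feature="user login")
print(result)
```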

Ensuring Security and Flexibility in MCP

MCP supports secure communication between clients and servers, particularly when interacting with remote services. For HTTP-based transports, its authorization model builds on OAuth 2.1, allowing organizations to manage who can access sensitive data or perform actions in external systems.

- OAuth 2.1: This authorization framework provides secure, token-based access to MCP servers, enabling proper authentication across services.

- Encryption: For remote communications (via HTTP/SSE or WebSockets), MCP supports TLS/SSL encryption to secure data transmission and prevent eavesdropping.
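Both points can be shown in a short sketch that prepares (but does not send) an authenticated request to a remote server. The endpoint URL and token value are placeholders, build_mcp_request is a hypothetical helper, and obtaining the access token through an OAuth 2.1 flow is assumed to have happened elsewhere.

```python
import json
import urllib.request
from urllib.parse import urlparse


def build_mcp_request(url: str, access_token: str, message: dict) -> urllib.request.Request:
    """Prepare an HTTPS POST that carries a JSON-RPC message and a bearer token."""
    # Refuse plain HTTP so the token and payload are only ever sent over TLS.
    if urlparse(url).scheme != "https":
        raise ValueError("remote MCP endpoints should be reached over HTTPS")
    return urllib.request.Request(
        url,
        data=json.dumps(message).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
            # The client accepts either a single JSON reply or an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


request = build_mcp_request(
    "https://mcp.example.com/mcp",  # placeholder endpoint
    "example-token",                # placeholder token
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"},
)
print(request.get_method(), request.full_url)
```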

These security features, along with the flexible transport options, make MCP a powerful, reliable, and secure way to integrate AI models with external tools and data. For MCP to be effective, however, certain best practices must be followed. Let’s take a look at some key guidelines for developers and system administrators.

MCP Best Practices and Considerations

To ensure MCP servers are effective, secure, and scalable, it’s important to follow these best practices in development, security, and system management.

1. Developing MCP Servers

When implementing MCP servers, it's essential to follow best practices to ensure efficient and secure interactions:

- Tool Discovery: Ensure tools are well-defined and easy to discover with clear documentation.

- Error Handling: Use standard error codes and provide descriptive error messages so the AI can adapt its behavior when a call fails.

- Response Formatting: Format responses consistently (e.g., JSON-RPC 2.0) for easy parsing by clients.
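As one way to apply the error-handling and formatting advice, the sketch below maps failures onto the standard JSON-RPC 2.0 error codes. The dispatch function, the HANDLERS table, and the add_task tool are illustrative assumptions; a production server would likely distinguish error cases more finely.

```python
# Standard JSON-RPC 2.0 error codes.
METHOD_NOT_FOUND = -32601
INVALID_PARAMS = -32602
INTERNAL_ERROR = -32603


def error_response(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def call_tool(name: str, arguments: dict) -> dict:
    """Toy tools/call handler that knows a single tool."""
    if name != "add_task":
        raise ValueError(f"Unknown tool: {name}")
    if "title" not in arguments:
        raise ValueError("add_task requires a 'title' argument")
    return {"content": [{"type": "text", "text": f"Task added: {arguments['title']}"}]}


HANDLERS = {"tools/call": call_tool}


def dispatch(request: dict) -> dict:
    """Route a request and always answer in the same JSON-RPC shape."""
    handler = HANDLERS.get(request["method"])
    if handler is None:
        return error_response(request["id"], METHOD_NOT_FOUND, f"Method not found: {request['method']}")
    try:
        result = handler(**request.get("params", {}))
    except (TypeError, ValueError) as exc:
        # Treated here as bad or missing parameters supplied by the caller.
        return error_response(request["id"], INVALID_PARAMS, str(exc))
    except Exception as exc:
        return error_response(request["id"], INTERNAL_ERROR, str(exc))
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


ok = dispatch({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": "add_task", "arguments": {"title": "Write report"}}})
unknown_method = dispatch({"jsonrpc": "2.0", "id": 2, "method": "tasks/delete"})
unknown_tool = dispatch({"jsonrpc": "2.0", "id": 3, "method": "tools/call",
                         "params": {"name": "remove_task", "arguments": {}}})
print(ok, unknown_method, unknown_tool, sep="\n")
```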

2. Security Considerations

Security is a key aspect of MCP’s architecture, especially when dealing with external systems and sensitive data. Here are some practices to ensure security:

- Transport Security: Use TLS/SSL for encrypted communication.

- Authentication and Authorization: Implement OAuth 2.1 for secure access control.

- Input Validation: Validate inputs and ensure message integrity to protect against attacks.
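Input validation can start with checking tool arguments against the tool's declared input schema before doing any work. The validator below is a deliberately small sketch that covers only required fields, unexpected fields, and a few primitive types; validate_arguments and the add_task schema are assumptions for this example, and a full JSON Schema validator would be more thorough.

```python
# Mapping from JSON Schema primitive type names to Python types.
_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def validate_arguments(arguments: dict, schema: dict) -> list:
    """Return a list of problems; an empty list means the input passed."""
    errors = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in arguments:
            errors.append(f"missing required argument: {name}")
    for name, value in arguments.items():
        if name not in properties:
            errors.append(f"unexpected argument: {name}")
            continue
        expected_name = properties[name].get("type")
        expected = _TYPES.get(expected_name)
        if expected is None:
            continue  # type not covered by this sketch
        # bool is a subclass of int in Python, so reject it for non-boolean fields.
        bool_mismatch = isinstance(value, bool) and expected_name != "boolean"
        if bool_mismatch or not isinstance(value, expected):
            errors.append(f"argument {name} should be of type {expected_name}")
    return errors


ADD_TASK_SCHEMA = {
    "type": "object",
    "properties": {"title": {"type": "string"}, "priority": {"type": "integer"}},
    "required": ["title"],
}

print(validate_arguments({"title": "Write report", "priority": 2}, ADD_TASK_SCHEMA))
print(validate_arguments({"priority": "high", "owner": "me"}, ADD_TASK_SCHEMA))
```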

3. Debugging and Monitoring

Effective debugging and monitoring help maintain the health and reliability of MCP servers:

- Logging: Track significant events for troubleshooting.

- Diagnostics: Implement health checks and monitor server performance.

- Testing: Regularly test transport mechanisms to ensure functionality.
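A minimal sketch of logging and a health check, using only the Python standard library. The logged decorator, the add_task handler, and health_check are hypothetical names. Logs go to stderr on the assumption that, for a STDIO server, stdout is reserved for protocol messages.

```python
import functools
import logging
import sys
import time

logger = logging.getLogger("mcp_server_sketch")
logger.setLevel(logging.INFO)
# Log to stderr so log lines never mix with protocol messages on stdout.
logger.addHandler(logging.StreamHandler(sys.stderr))


def logged(method_name: str):
    """Decorator that records the outcome and duration of each call."""
    def wrap(fn):
        @functools.wraps(fn)
        def inner(*args, **kwargs):
            start = time.perf_counter()
            try:
                result = fn(*args, **kwargs)
            except Exception:
                logger.exception("%s failed", method_name)
                raise
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.info("%s ok in %.1f ms", method_name, elapsed_ms)
            return result
        return inner
    return wrap


@logged("tools/call add_task")
def add_task(title: str) -> str:
    return f"Task added: {title}"


def health_check() -> dict:
    """Cheap status report that a monitoring system could poll."""
    return {"status": "ok", "tools": ["add_task"]}


add_task("Write report")
print(health_check())
```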

4. Scaling MCP

As the ecosystem grows, scaling MCP systems efficiently becomes crucial:

- Load Balancing: Distribute client requests across servers for better scalability.

- Failover Mechanisms: Use failover strategies to ensure high availability and reliability.
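Load balancing and failover can be combined in a small round-robin pool. This is a sketch only: ServerPool is a hypothetical class, each backend is modeled as a plain callable, and ConnectionError stands in for whatever failure a real transport would raise.

```python
from typing import Callable, List


class ServerPool:
    """Round-robin over backends, skipping any that fail to respond."""

    def __init__(self, servers: List[Callable[[dict], str]]):
        self.servers = list(servers)
        self._next = 0  # index of the backend to try first on the next call

    def call(self, request: dict) -> str:
        last_error = None
        for offset in range(len(self.servers)):
            index = (self._next + offset) % len(self.servers)
            try:
                result = self.servers[index](request)
            except ConnectionError as exc:
                last_error = exc  # fail over to the next backend
                continue
            self._next = (index + 1) % len(self.servers)
            return result
        raise RuntimeError("all servers failed") from last_error


def healthy(name: str) -> Callable[[dict], str]:
    return lambda request: f"{name} handled {request['method']}"


def broken(request: dict) -> str:
    raise ConnectionError("server unreachable")


pool = ServerPool([healthy("a"), broken, healthy("b")])
responses = [pool.call({"method": "tools/list"}) for _ in range(3)]
print(responses)
```

In this run the second call skips the broken backend and lands on the third one, after which the rotation starts again from the first.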

Wrapping Up

MCP is transforming the way AI models interact with external tools, data sources, and services, creating a more unified, secure, and flexible framework for AI integrations.

With its ability to streamline tool access, improve reasoning, and enable agentic workflows, MCP is making AI systems more adaptive and autonomous. As the protocol gains adoption, it will play a critical role in building the next generation of AI-driven applications.

FAQs

1. What is the Model Context Protocol (MCP)?

MCP is an open standard that allows AI models to connect to external tools, services, and data sources in a standardized way, enabling them to perform more complex, real-time tasks autonomously.

2. How does MCP differ from traditional APIs?

Unlike traditional APIs, which expose raw data or functions, MCP provides context and intent-based communication, allowing AI systems to understand why and when to use specific tools, not just how.

3. Can MCP be used for agentic AI workflows?

Yes, MCP enables agentic AI by allowing models to reason, plan, and autonomously select tools to complete tasks, creating dynamic and goal-driven workflows.

4. How does MCP support real-time communication?

MCP supports real-time communication through Streamable HTTP, which can stream server messages via Server-Sent Events (SSE), and through custom transports such as WebSockets, allowing AI systems to interact with external services with low latency.

5. How does MCP ensure secure communication?

MCP uses OAuth 2.1 for secure authorization and TLS/SSL encryption for transport security, ensuring that all interactions between AI models and external systems are protected.
